Deep Learning is one of the most exciting and transformative fields in artificial intelligence (AI). It has revolutionized how machines perceive, interpret, and interact with data. From image recognition and natural language processing to self-driving cars, deep learning is the backbone of many modern AI applications. In this article, we will explore deep learning in depth, including its definition, underlying principles, techniques, applications, and future potential.

What is Deep Learning?

Deep learning is a subset of machine learning, which is, in turn, a subset of artificial intelligence. It involves the use of artificial neural networks to model and understand complex patterns in large datasets. The “deep” in deep learning refers to the multiple layers of processing involved in learning data representations. Stacking layers lets a model extract hierarchical features: early layers capture simple patterns, and later layers combine them into more abstract representations. This often improves accuracy on tasks such as image and speech recognition.

Neural Networks: The Core of Deep Learning

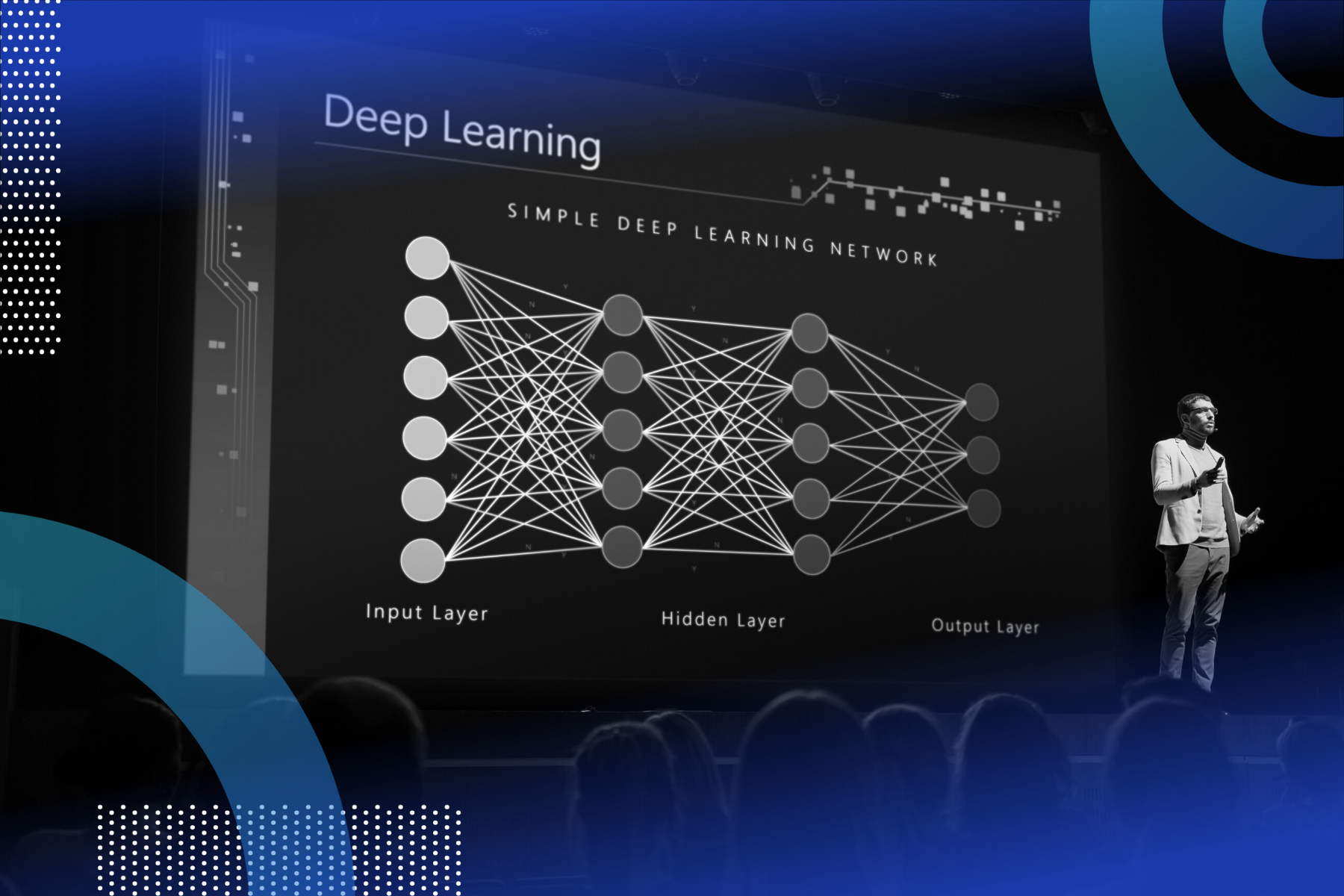

At the heart of deep learning are artificial neural networks (ANNs), which are computational models loosely inspired by the human brain. Neural networks consist of layers of interconnected nodes, or “neurons,” which process input data and transmit information. The simplest and most widely used building block is the feedforward neural network, where information flows in one direction from input to output.

Deep learning models stack many layers of neurons, hence the term “deep.” These layers allow the network to progressively learn more abstract, higher-level features from the raw input data, which makes such models very effective at tasks such as image and speech recognition.

Key Concepts in Deep Learning

1. Artificial Neural Networks (ANNs)

An artificial neural network is a series of layers of interconnected nodes. Each node processes an input and passes the result to the next layer. The layers can be categorized into three types:

- Input layer: This is the first layer, where raw data (such as images or text) is fed into the network.

- Hidden layers: These layers process the input data through various weights and activations. Deep learning models typically have several hidden layers, each learning increasingly abstract features from the data.

- Output layer: The final layer produces the output or prediction of the model, such as a class label for classification tasks.
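The three layer types above can be sketched as a single forward pass. This is a minimal illustration, not a definitive implementation: the layer sizes, the weight values, and the helper names (`dense`, `forward`, `params`) are all made up for the example, and it assumes a ReLU hidden layer and a softmax output.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: `weights` holds one row per output node."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(values):
    # Subtract the maximum before exponentiating for numerical stability.
    shifted = [v - max(values) for v in values]
    exps = [math.exp(v) for v in shifted]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, params):
    # Input layer -> hidden layer (weights + activation) -> output layer.
    hidden = relu(dense(x, params["W1"], params["b1"]))
    return softmax(dense(hidden, params["W2"], params["b2"]))

# Hypothetical, hand-picked weights: 3 inputs, 4 hidden nodes, 2 classes.
params = {
    "W1": [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5],
           [-0.6, 0.1, 0.4], [0.2, 0.2, 0.2]],
    "b1": [0.0, 0.1, 0.0, -0.1],
    "W2": [[0.4, -0.3, 0.2, 0.1], [-0.2, 0.5, 0.3, -0.4]],
    "b2": [0.0, 0.0],
}

probs = forward([1.0, 2.0, 3.0], params)
print(probs)  # two class probabilities that sum to 1
```

In a trained model the weights would be learned from data rather than written by hand.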

2. Activation Functions

Activation functions are mathematical functions applied to the output of each node in a neural network. They introduce non-linearity to the model, allowing it to learn more complex patterns. Common activation functions include:

- Sigmoid: Outputs values between 0 and 1.

- ReLU (Rectified Linear Unit): Outputs zero for negative inputs and the input itself otherwise, i.e. max(0, x).

- Tanh: Outputs values between -1 and 1.

ReLU has become one of the most popular activation functions due to its simplicity and effectiveness in training deep networks.
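As a rough sketch, the three functions listed above can be written in a few lines of plain Python. The function names are chosen here for illustration, and the sigmoid is written in a form that avoids overflow for large negative inputs.

```python
import math

def sigmoid(x):
    # Branch on the sign so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def relu(x):
    # Zero for negative inputs, identity otherwise.
    return max(0.0, x)

def tanh(x):
    return math.tanh(x)

for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), relu(value), tanh(value))
```

Part of ReLU's appeal is visible here: it needs only a comparison, with no exponentials.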

3. Backpropagation

Backpropagation is a key technique in training neural networks. It computes the gradient of the loss function (a measure of error) with respect to each weight in the network by applying the chain rule backward from the output layer to the input layer. An optimization algorithm such as stochastic gradient descent (SGD) then uses these gradients to adjust the weights in the direction that reduces the error. The two steps are repeated iteratively over the training data.
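To make the chain rule concrete, here is a minimal sketch for the smallest possible "network": one sigmoid neuron with a squared-error loss. The setup, the variable names, and the numbers are assumptions made for illustration. The analytic gradient is compared against a finite-difference estimate, followed by one gradient descent step.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    # Squared error of a single sigmoid neuron on one example.
    return 0.5 * (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    # Forward pass, keeping the intermediate value needed for the backward pass.
    a = sigmoid(w * x + b)
    # Backward pass: apply the chain rule from the loss back to the parameters.
    dL_da = a - y               # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)       # derivative of the sigmoid w.r.t. its input
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz     # (dL/dw, dL/db)

w, b, x, y = 0.5, -0.1, 2.0, 1.0
grad_w, grad_b = gradients(w, b, x, y)

# Sanity check: central finite difference should agree with the analytic gradient.
eps = 1e-6
numeric_grad_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)

# One gradient descent step moves the weights against the gradient.
learning_rate = 0.1
new_w = w - learning_rate * grad_w
new_b = b - learning_rate * grad_b
print(loss(w, b, x, y), loss(new_w, new_b, x, y))
```

Deep learning frameworks automate the backward pass for networks with many layers, but the principle is the same.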

4. Training Deep Networks

In the common supervised setting, training a deep learning model involves feeding labeled data into the network, computing gradients of the loss through backpropagation, and adjusting the weights to minimize that loss. This process requires significant computational power, especially when training large models on vast datasets. It also involves tuning hyperparameters, such as the learning rate, batch size, and number of layers, to achieve the best performance.
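The overall shape of a training loop can be sketched on a deliberately tiny problem. The example below is an assumption-laden toy, not a deep network: it fits a single linear unit to synthetic labeled data generated from y = 2x + 1, using plain stochastic gradient descent. The dataset, learning rate, and epoch count are invented for illustration.

```python
import random

random.seed(0)  # fixed seed so the shuffling is reproducible

# Synthetic labeled data: inputs x with targets y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]]

w, b = 0.0, 0.0        # model parameters, initialized to zero
learning_rate = 0.05   # hyperparameter
epochs = 200           # hyperparameter

def mean_squared_error(w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

initial_loss = mean_squared_error(w, b)

for _ in range(epochs):
    random.shuffle(data)                 # visit examples in a new order each epoch
    for x, y in data:
        error = (w * x + b) - y          # forward pass and prediction error
        w -= learning_rate * error * x   # gradient of 0.5 * error^2 w.r.t. w
        b -= learning_rate * error       # gradient of 0.5 * error^2 w.r.t. b

final_loss = mean_squared_error(w, b)
print(w, b, initial_loss, final_loss)
```

Real training differs mainly in scale: more parameters, mini-batches instead of single examples, and a held-out validation set for choosing hyperparameters.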

Types of Deep Learning Models

Deep learning is an umbrella term that covers a variety of architectures, each designed for different types of data and tasks. Here are some of the most important types:

1. Convolutional Neural Networks (CNNs)

CNNs are primarily used for image and video processing. They are designed to automatically detect spatial hierarchies in data by applying convolutional layers that filter the input data to extract local features. CNNs are used in applications like image classification, object detection, and facial recognition.

Key components of CNNs include:

- Convolutional layers: Apply filters to the input data to extract features like edges, textures, and shapes.

- Pooling layers: Reduce the spatial size (height and width) of the feature maps, which lowers computation and makes the model less sensitive to small shifts in the input.

- Fully connected layers: Used to make final predictions based on the learned features.
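The first two components can be illustrated with a small sketch in plain Python. It assumes a single-channel image, no padding, a stride of 1 for the convolution, and non-overlapping 2x2 max pooling. The image and the hand-written edge filter are made up for the example; in a real CNN the filter values are learned.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2d(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [[max(feature_map[i + di][j + dj]
                 for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

# A 5x5 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hypothetical filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 1], [-1, 1]]

features = conv2d(image, vertical_edge)  # 4x4 map, nonzero only at the edge
pooled = max_pool2d(features)            # 2x2 map after pooling
print(features)
print(pooled)  # [[2, 0], [2, 0]]
```

The filter fires only where the brightness changes, and pooling keeps that response while shrinking the map from 4x4 to 2x2.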

2. Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data, such as time series, speech, and text. Unlike CNNs, which typically operate on fixed-size inputs, RNNs maintain a hidden state, a form of “memory” that carries information from previous inputs forward. This makes them well suited to tasks where context is important, like language translation and speech recognition.

A common variant of RNNs is the Long Short-Term Memory (LSTM) network, which mitigates the vanishing gradient problem, allowing the model to learn longer sequences.
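The idea of a hidden state carried across time steps can be sketched with a plain (non-LSTM) recurrent cell. The weights and the names `rnn_step` and `run_rnn` are invented for illustration, and a tanh activation is assumed. An LSTM adds gating on top of this basic recurrence, which the sketch does not attempt to show.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """New hidden state from the current input and the previous hidden state."""
    return [math.tanh(sum(wx * xi for wx, xi in zip(W_xh[k], x))
                      + sum(wh * hi for wh, hi in zip(W_hh[k], h_prev))
                      + b_h[k])
            for k in range(len(b_h))]

def run_rnn(sequence, W_xh, W_hh, b_h):
    h = [0.0] * len(b_h)          # initial hidden state
    for x in sequence:            # the same weights are reused at every step
        h = rnn_step(x, h, W_xh, W_hh, b_h)
    return h

# Hypothetical weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.4], [-0.2, 0.3]]
b_h = [0.0, 0.0]

# The same values in a different order produce different final states.
h_a = run_rnn([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h)
h_b = run_rnn([[0.0], [0.0], [1.0]], W_xh, W_hh, b_h)
print(h_a)
print(h_b)
```

Because the final state depends on the order of the inputs, the network can use context from earlier in the sequence.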

3. Generative Adversarial Networks (GANs)

GANs consist of two neural networks: a generator and a discriminator. The generator creates fake data, while the discriminator tries to distinguish between real and fake data. Through this adversarial process, GANs can generate highly realistic data, such as images and videos, from random noise.
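The adversarial objective can be sketched through its loss functions alone. The example assumes the discriminator outputs a probability that a sample is real, and it uses binary cross-entropy with the commonly used "non-saturating" generator loss. The function names and the sample probabilities are made up, and the two networks themselves are omitted.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for one predicted probability."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored as 1 and fake samples as 0.
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    # The generator wants its fake samples to be scored as real (1).
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs on two real and two generated samples.
confident = discriminator_loss([0.9, 0.8], [0.1, 0.2])
confused = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(confident, confused)
print(generator_loss([0.1]), generator_loss([0.9]))
```

During training the two losses pull in opposite directions: the discriminator's loss falls as it separates real from fake, while the generator's loss falls as its samples fool the discriminator.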

GANs are widely used for applications in image generation, video creation, and even drug discovery.

4. Transformers

Transformers are a type of model that has achieved great success in natural language processing (NLP). They use self-attention mechanisms to process all positions of an input sequence in parallel, allowing the model to capture long-range dependencies more efficiently than RNNs, which must step through the sequence one element at a time. Models like GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) have revolutionized tasks like language translation, text summarization, and question-answering.
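Self-attention can be sketched as scaled dot-product attention in plain Python. To keep the example short, it assumes the queries, keys, and values are the raw token vectors themselves, whereas a real Transformer first applies learned linear projections and uses several attention heads. The token vectors are invented for illustration.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position, including itself.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, all_weights

# Three hypothetical 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print(row)  # each row sums to 1
```

Every position attends to every other position in a single step, regardless of how far apart they are in the sequence, which is what lets attention capture long-range dependencies.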

Applications of Deep Learning

Deep learning has found applications across various industries, making significant contributions to technology and society. Some of the most notable applications include:

1. Image and Video Recognition

Deep learning models, especially CNNs, have revolutionized image and video recognition tasks. From identifying objects in photos to analyzing medical images for signs of disease, deep learning has drastically improved accuracy and speed. Applications include:

- Face recognition

- Autonomous vehicles

- Medical imaging analysis

2. Natural Language Processing (NLP)

NLP is another area where deep learning has made tremendous strides. Models like BERT and GPT have enabled machines to understand and generate human language with a high level of sophistication. Applications in NLP include:

- Speech recognition

- Machine translation

- Chatbots and virtual assistants

- Text summarization

3. Speech Recognition and Synthesis

Deep learning has significantly advanced speech recognition systems, allowing machines to transcribe spoken language into text. It is also used in speech synthesis, where machines generate human-like speech from text. Applications include:

- Voice assistants (e.g., Siri, Alexa)

- Transcription services

- Real-time language translation

4. Healthcare and Drug Discovery

Deep learning models are being used to analyze vast amounts of medical data, from medical images to genetic data, to identify patterns that may be missed by humans. In drug discovery, deep learning models can predict molecular interactions and accelerate the process of finding new treatments.

5. Gaming and Entertainment

Deep learning has also been employed in the gaming and entertainment industries. AI models are used to create realistic characters, generate game environments, and improve player experiences. Deep learning techniques have also been used to create synthetic art and music.

Challenges and Future Directions

While deep learning has achieved remarkable success, it still faces several challenges:

- Data requirements: Deep learning models require large datasets for training, which may not always be available.

- Computational resources: Training deep models demands significant computational power, which can be expensive.

- Interpretability: Deep learning models are often considered “black boxes,” making it difficult to understand why they make certain decisions.

Looking forward, the future of deep learning holds enormous potential. Researchers are exploring more efficient models, methods for training with less data, and ways to make models more interpretable. With advancements in hardware, deep learning is likely to continue pushing the boundaries of what machines can accomplish.

Conclusion

Deep learning is transforming industries and reshaping how we interact with technology. Loosely inspired by how the brain processes information, deep learning models have achieved breakthrough performance in tasks like image recognition, natural language processing, and autonomous systems. As the field continues to evolve, it promises even greater advances, making it one of the most exciting areas in AI today.
